OpenAI GPT-3 API error: "This model's maximum context length is 4097 tokens"

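I'm making a request to the completions endpoint. My prompt is 1360 tokens, as verified by the Playground and the Tokenizer. I won't show the prompt, as it's a little too long for this question.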

Here is my request to OpenAI in Node.js, using the openai npm package:

    const response = await openai.createCompletion({
      model: 'text-davinci-003',
      prompt,
      max_tokens: 4000,
      temperature: 0.2
    })

When tested in the Playground, the total token count after the response is 1374.

When I submit the prompt through the completions API, I get the following error:

    error: {
      message: "This model's maximum context length is 4097 tokens, however you requested 5360 tokens (1360 in your prompt; 4000 for the completion). Please reduce your prompt; or completion length.",
      type: 'invalid_request_error',
      param: null,
      code: null
    }
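The numbers in the error message add up directly: 1360 prompt tokens plus the 4000 tokens reserved by `max_tokens` is 5360, which exceeds 4097, even though the actual completion would be far shorter. A minimal sketch of that check, assuming the rule is exactly "prompt tokens + `max_tokens` ≤ context length"; `checkCompletionRequest` is a hypothetical helper, not part of the `openai` package:

```javascript
// Hypothetical helper mirroring the arithmetic in the error message.
// Assumption: the request is valid only if promptTokens + maxTokens
// does not exceed the model's context length (4097 here, per the error).
function checkCompletionRequest(promptTokens, maxTokens, contextLength = 4097) {
  // max_tokens counts in full, regardless of how long the real completion is.
  const requested = promptTokens + maxTokens;
  return {
    requested,
    ok: requested <= contextLength,
  };
}

const result = checkCompletionRequest(1360, 4000);
console.log(result); // { requested: 5360, ok: false }
```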

If you have been able to solve this, I'd love to hear how you did it.

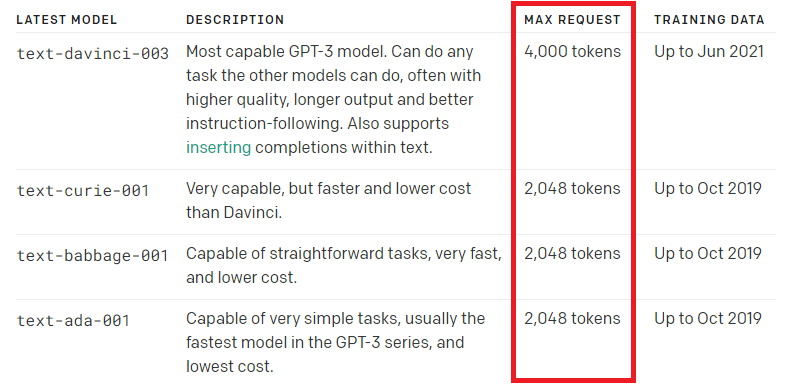

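The model's token limit is shared between the prompt and the completion: the prompt's tokens plus `max_tokens` (the tokens reserved for the completion) must not exceed the token limit of the particular GPT-3 model.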

As stated in the official OpenAI article:

> Depending on the model used, requests can use up to `4097` tokens shared between prompt and completion. If your prompt is `4000` tokens, your completion can be `97` tokens at most.
>
> The limit is currently a technical limitation, but there are often creative ways to solve problems within the limit, e.g. condensing your prompt, breaking the text into smaller pieces, etc.
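The shared limit reduces to a one-line calculation for the completion budget. A minimal sketch, assuming a 4097-token context length as in the quote; `maxCompletionTokens` is a hypothetical helper, not an OpenAI API:

```javascript
// Hypothetical helper: how many completion tokens fit after the prompt,
// assuming prompt and completion share one context window (4097 tokens here).
function maxCompletionTokens(promptTokens, contextLength = 4097) {
  // Never return a negative budget; 0 means the prompt alone fills the window.
  return Math.max(0, contextLength - promptTokens);
}

console.log(maxCompletionTokens(4000)); // 97, the example from the quote
console.log(maxCompletionTokens(1360)); // 2737, for the 1360-token prompt in the question
```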

This was solved by Reddit user "bortlip".

The `max_tokens` parameter sets the maximum number of tokens for the response (the completion).

From OpenAI's API reference:

https://platform.openai.com/docs/api-reference/completions/create#completions/create-max_tokens

> The token count of your prompt plus max_tokens cannot exceed the model's context length.

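So, to fix the issue, I subtracted the prompt's token count from `max_tokens` (keeping prompt tokens plus `max_tokens` within the 4097-token limit), and it works just fine.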
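A sketch of this fix for the question's Node.js setup, under stated assumptions: the prompt's token count is measured separately (for example with the Tokenizer), the context length is 4097 as reported in the error, and `max_tokens` is capped at the context length minus the prompt tokens (one reading of "subtract from `max_tokens`"). `buildCompletionParams` is a hypothetical helper; the object it returns is what would be passed to `openai.createCompletion`.

```javascript
// Hypothetical helper: build completion request params whose max_tokens
// keeps promptTokens + max_tokens within the model's context length.
// Assumes promptTokens was measured separately (e.g. with the Tokenizer).
function buildCompletionParams(prompt, promptTokens, {
  model = "text-davinci-003",
  desiredMaxTokens = 4000,
  contextLength = 4097,
  temperature = 0.2,
} = {}) {
  const budget = contextLength - promptTokens;
  if (budget <= 0) {
    throw new Error(`Prompt (${promptTokens} tokens) leaves no room for a completion`);
  }
  return {
    model,
    prompt,
    // Keep the requested value if it fits; otherwise use the remaining budget.
    max_tokens: Math.min(desiredMaxTokens, budget),
    temperature,
  };
}

const prompt = "<your 1360-token prompt>"; // placeholder text
const params = buildCompletionParams(prompt, 1360);
console.log(params.max_tokens); // 2737
// With the openai v3 Node package: await openai.createCompletion(params)
```

Capping with `Math.min` leaves a smaller requested value unchanged and only shrinks `max_tokens` when the prompt would otherwise push the request past the limit.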